Quick & dirty prototypes¶

Micro:bit¶

The Micro:bit microcontrollers can communicate with each other by radio. I tried to capture FM radio or walkie-talkie transmissions by looping through the radio groups, but nothing came up. Here is the Python code anyway:

Code

from microbit import *
import radio

# Code in a 'while True:' loop repeats forever
while True:
    # A checkmark icon when booting the program
    display.show(Image.YES)
    sleep(1000)

    # Turning the radio on
    radio.on()

    # Looping through groups 0 to 255 (range(256) includes 255)
    for i in range(256):
        # Changing the group
        radio.config(group=i, power=0)

        # Receiving a signal (returns None if no message is waiting)
        message = radio.receive()

        # If a message is received,
        # a skull icon should appear on the LEDs
        if message:
            display.show(Image.SKULL)
            sleep(400)

    sleep(1000)
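As far as the MicroPython radio documentation describes it, the group setting is only an address filter for messages between micro:bits, while the tuned frequency is set by the channel (0 to 83, in 1 MHz steps starting at 2400 MHz). Under that reading, looping through groups never leaves the 2.4 GHz band, which may explain why no FM or walkie-talkie signal showed up. The sketch below checks this in plain Python; the function names are hypothetical, and the FM (about 100 MHz) and PMR446 walkie-talkie (446 MHz) figures are assumed typical European values.

```python
# Frequencies are in MHz. The micro:bit figures follow the MicroPython radio
# documentation (channels 0-83, 1 MHz apart, starting at 2400 MHz).
# The FM and PMR446 example frequencies are assumptions (typical European values).
MICROBIT_BASE_MHZ = 2400
MICROBIT_CHANNELS = range(0, 84)


def microbit_channel_to_mhz(channel):
    """Return the frequency in MHz that a micro:bit radio channel is tuned to."""
    if channel not in MICROBIT_CHANNELS:
        raise ValueError("channel must be between 0 and 83")
    return MICROBIT_BASE_MHZ + channel


def microbit_can_tune(freq_mhz):
    """True if freq_mhz lies inside the band covered by channels 0 to 83."""
    low = microbit_channel_to_mhz(MICROBIT_CHANNELS[0])
    high = microbit_channel_to_mhz(MICROBIT_CHANNELS[-1])
    return low <= freq_mhz <= high


examples = [
    ("FM broadcast", 100.0),
    ("PMR446 walkie-talkie", 446.0),
    ("micro:bit default channel 7", 2407.0),
]
for name, freq in examples:
    status = "tunable" if microbit_can_tune(freq) else "out of range"
    print(name, freq, "MHz:", status)
```

Even inside the band, the radio module only hands over packets in its own format, so this is a range check and not a claim that arbitrary 2.4 GHz signals could be decoded.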

After this quick test, I had doubts about the CCTV detection cape. The system used by !Mediengruppe Bitnik was a 2.4 GHz radio receiver. It is quite large (about the size of a hand), and I doubted that it could locate a camera in space.

I therefore contacted the French artist Benjamin Gaulon, whose 2008 project revealed CCTV images in the street on small screens placed right underneath the cameras. He quickly replied that:

- CCTVs are closed-circuit systems that cannot be tapped into

- Most surveillance cameras nowadays no longer work with radio frequencies but with IP systems through private wireless networks.

Based on those observations, I decided to abandon the CCTV detection wearable device to focus on digital camouflage.

Instagram filters¶

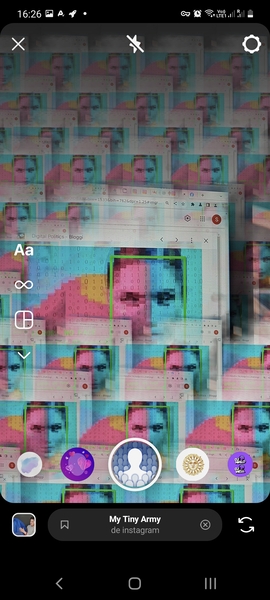

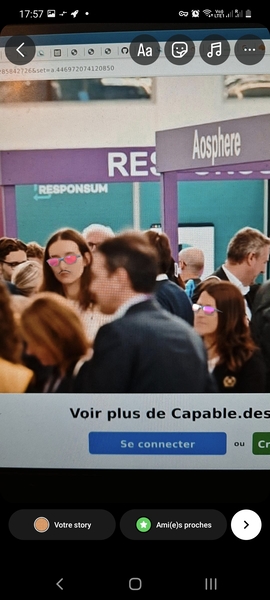

To know which patterns I should make for digital camouflage, I first need to understand what is recognized and what is not. As a quick and dirty test, I started with what I had at hand: Instagram filters. I tested the filters by placing my laptop screen in front of the camera, so that I could easily try different types of images. I ran tests on pictures from Adam Harvey’s projects and from Rachele Didero’s Capable project.

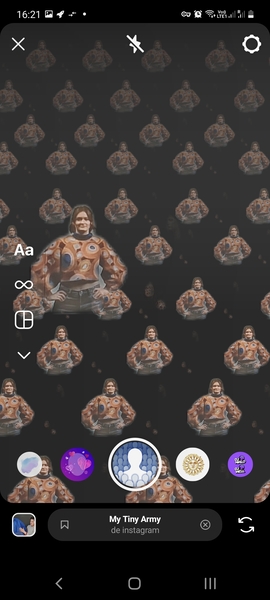

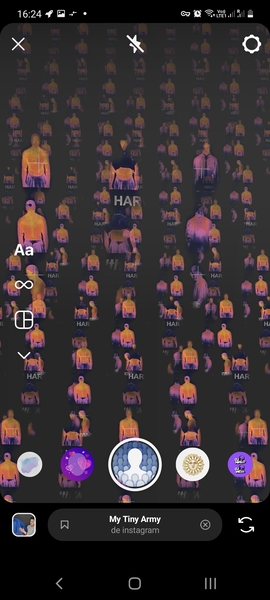

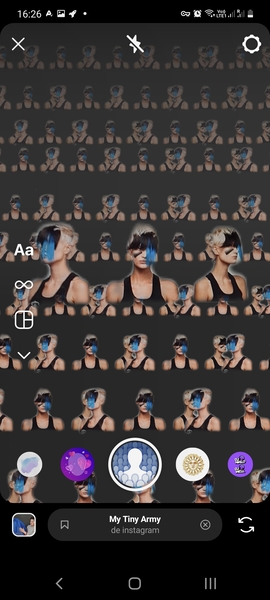

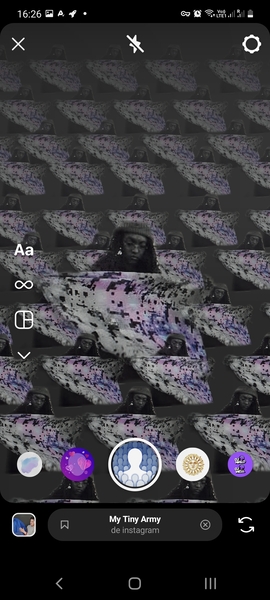

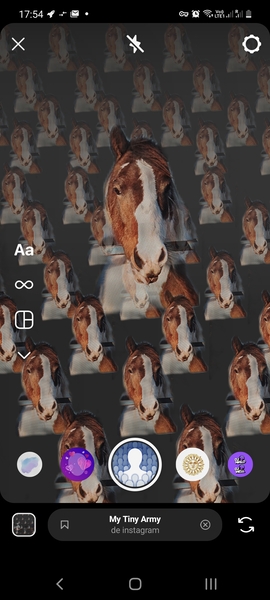

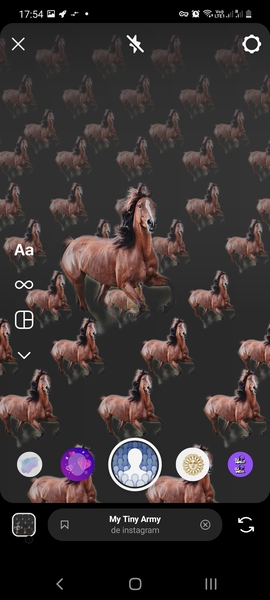

Filter: My Tiny Army¶

I used the filter «My Tiny Army» because some filters only work on one face or person at a time. The filter detected people in Harvey’s and Didero’s work. But it also worked on animals and flowers, so we can conclude that the filter simply separates a foreground subject from the background. These results are therefore not relevant. It did output funny patterns, though.

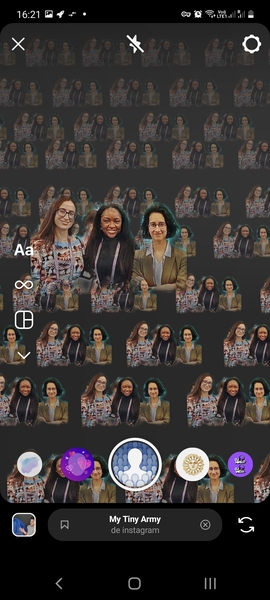

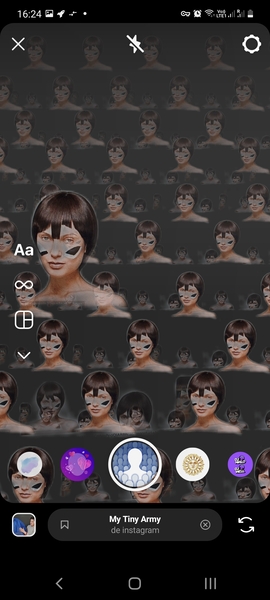

Filter: Juliet¶

I then tried another filter that handles multiple faces. It worked on humans and not on animals, but only on people facing the camera, not in profile.

OpenCV¶

I only added a command-line argument so that the image filename can be specified when running the script. If test-opencv.py contains the code below, you can run it like this: python test-opencv.py "myImage.jpg"

Code from https://www.datacamp.com/tutorial/face-detection-python-opencv

# Importing libraries
import sys

import cv2
import matplotlib.pyplot as plt

# Image filepath is specified when executing the script from the command line
imagePath = sys.argv[1]

# Reads the image (OpenCV loads it in BGR channel order)
img = cv2.imread(imagePath)

# Converts to grayscale for faster processing
gray_image = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# Loading face classifier
face_classifier = cv2.CascadeClassifier(
    cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
)

# Detecting faces; each result is an (x, y, w, h) bounding box
face = face_classifier.detectMultiScale(
    gray_image, scaleFactor=1.1, minNeighbors=5, minSize=(40, 40)
)

# Drawing a green rectangle around each detected face
for (x, y, w, h) in face:
    cv2.rectangle(img, (x, y), (x + w, y + h), (0, 255, 0), 4)

# Converting from BGR to RGB so that matplotlib shows the right colours
img_rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

plt.figure(figsize=(20,10))
plt.imshow(img_rgb)
plt.axis('off')
plt.show()  # This line was missing from the original code; without it, the image is not displayed.
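The script above reads sys.argv[1] directly, so running it without a filename stops with an IndexError. A minimal sketch of an alternative with the standard argparse module is shown below; the parse_args helper and the argument name image are hypothetical, not part of the tutorial code.

```python
import argparse
from pathlib import Path


def parse_args(argv=None):
    """Parse the command line; `image` is the single required argument."""
    parser = argparse.ArgumentParser(description="Detect faces in one image.")
    parser.add_argument("image", type=Path, help="path to the image to analyse")
    return parser.parse_args(argv)


# An explicit list is passed here for demonstration;
# calling parse_args() with no argument reads the real command line.
args = parse_args(["myImage.jpg"])
print(args.image.name)
```

With this in place, a missing filename produces a usage message instead of a traceback. It is also worth checking that the file exists, since cv2.imread returns None when it cannot read the image.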

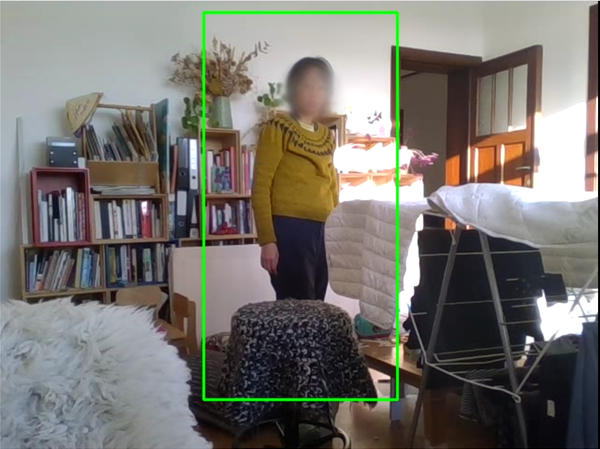

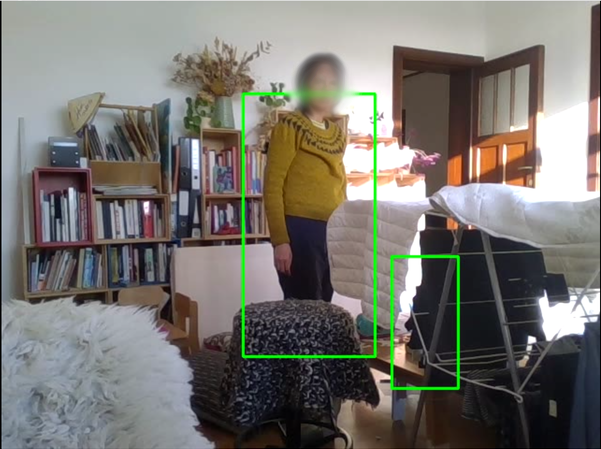

Human body detection with OpenCV¶

Code from https://thedatafrog.com/en/articles/human-detection-video/ taking a webcam as input. It works when the person is not too close to the camera. I am not documenting this code; it is copied from the original article.

Code

# Code from <https://thedatafrog.com/en/articles/human-detection-video/>

# import the necessary packages
import numpy as np
import cv2

# initialize the HOG descriptor/person detector
hog = cv2.HOGDescriptor()
hog.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())

cv2.startWindowThread()

# open webcam video stream
cap = cv2.VideoCapture(0)

# the output will be written to output.avi
out = cv2.VideoWriter(
    'output.avi',
    cv2.VideoWriter_fourcc(*'MJPG'),
    15.,
    (640,480))

while(True):
    # Capture frame-by-frame
    ret, frame = cap.read()

    # resizing for faster detection
    frame = cv2.resize(frame, (640, 480))
    # using a greyscale picture, also for faster detection
    # (OpenCV frames are BGR; note that the detector below still receives the colour frame)
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # detect people in the image
    # returns the bounding boxes for the detected objects
    boxes, weights = hog.detectMultiScale(frame, winStride=(8,8) )

    boxes = np.array([[x, y, x + w, y + h] for (x, y, w, h) in boxes])

    for (xA, yA, xB, yB) in boxes:
        # display the detected boxes in the colour picture
        cv2.rectangle(frame, (xA, yA), (xB, yB),
                      (0, 255, 0), 2)

    # Write the output video
    out.write(frame.astype('uint8'))
    # Display the resulting frame
    cv2.imshow('frame',frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# When everything done, release the capture
cap.release()
# and release the output
out.release()
# finally, close the window
cv2.destroyAllWindows()
cv2.waitKey(1)
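The detector returns boxes as (x, y, width, height) together with a weight per detection, and the code above converts the boxes to corner coordinates before drawing them while ignoring the weights. The plain-Python sketch below shows that conversion, plus an optional filter on the weights. The helper names and the 0.5 threshold are hypothetical and would need tuning on real footage.

```python
def to_corners(boxes):
    """Convert (x, y, w, h) boxes to (x1, y1, x2, y2) corner boxes."""
    return [(x, y, x + w, y + h) for (x, y, w, h) in boxes]


def keep_confident(boxes, weights, min_weight=0.5):
    """Keep only boxes whose weight is at least min_weight (assumed threshold)."""
    return [box for box, weight in zip(boxes, weights) if weight >= min_weight]


# Made-up detections: two boxes with their weights.
boxes = [(100, 50, 64, 128), (300, 40, 80, 160)]
weights = [0.9, 0.2]

print(to_corners(keep_confident(boxes, weights)))  # [(100, 50, 164, 178)]
```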

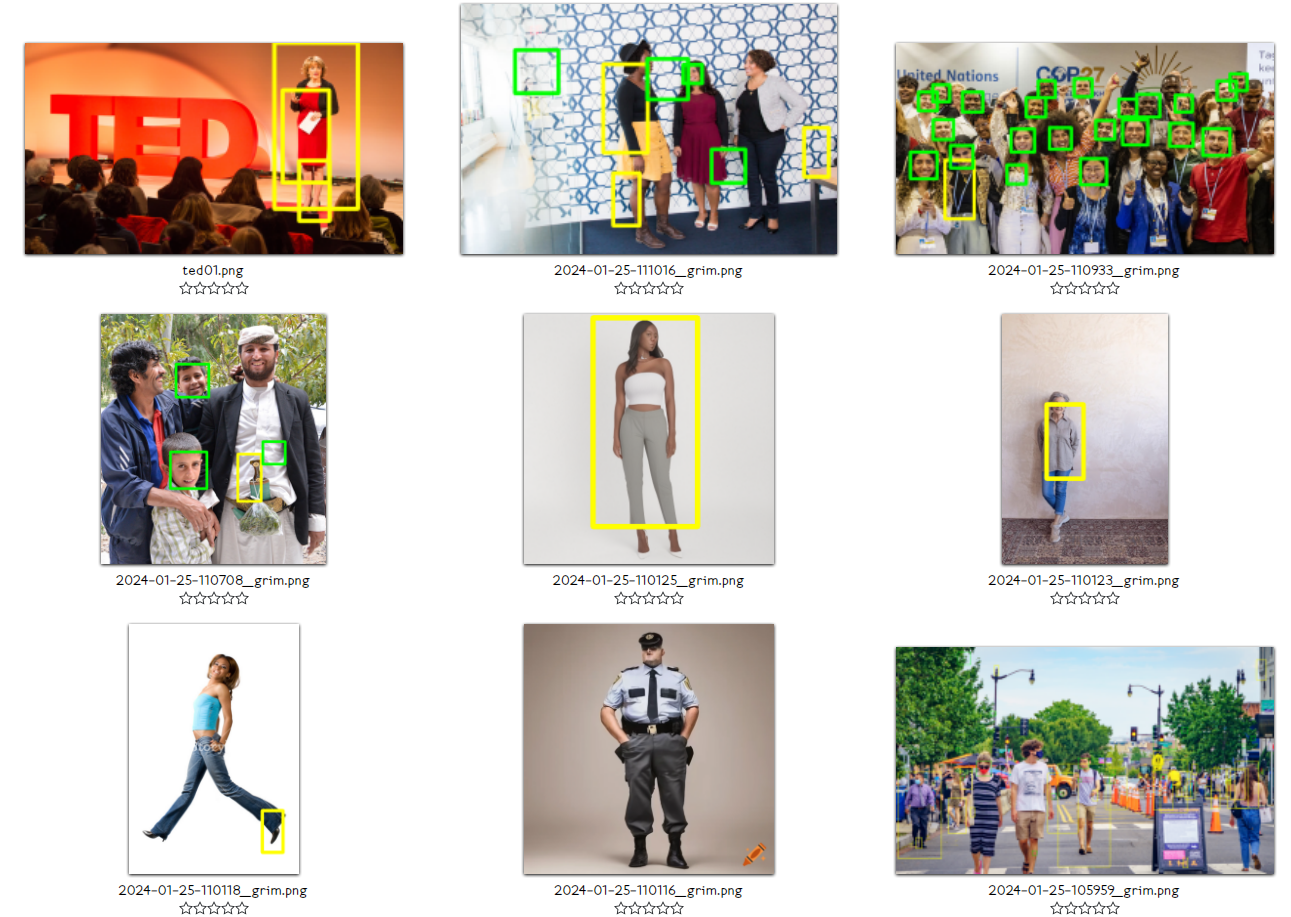

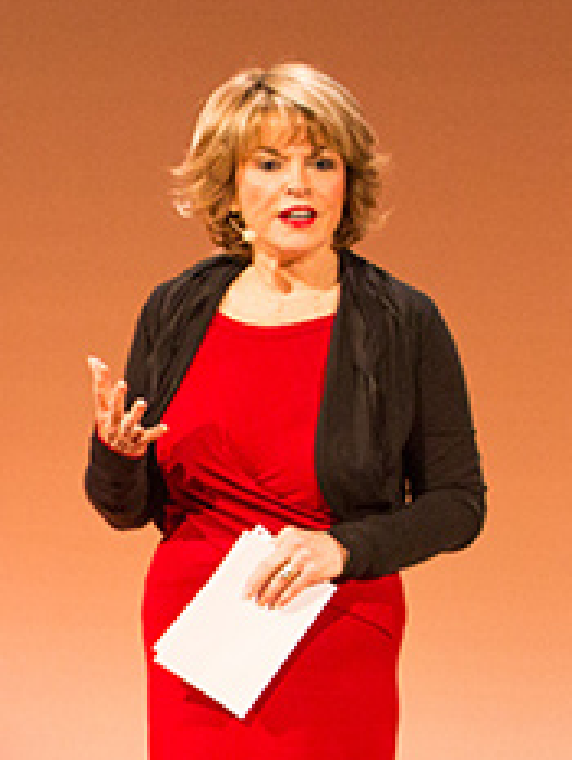

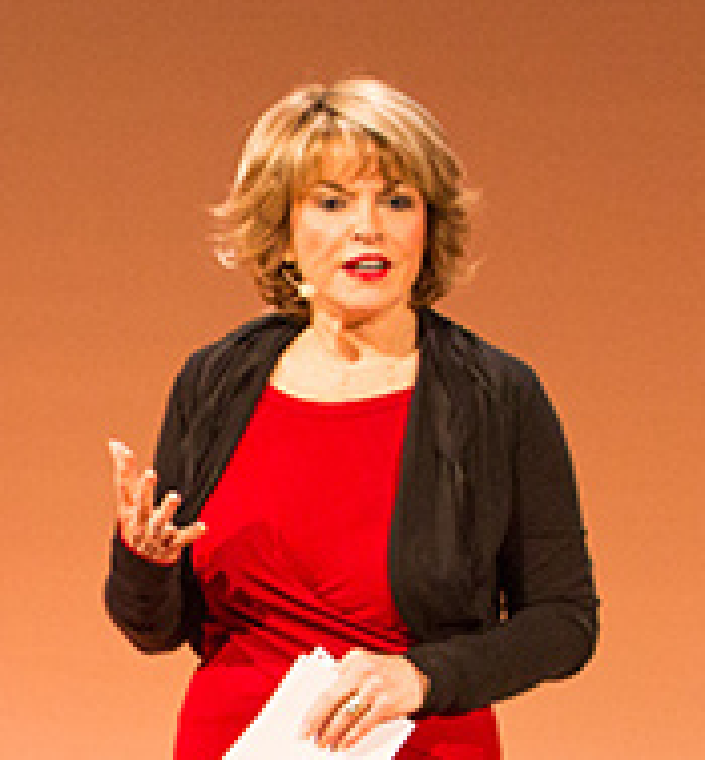

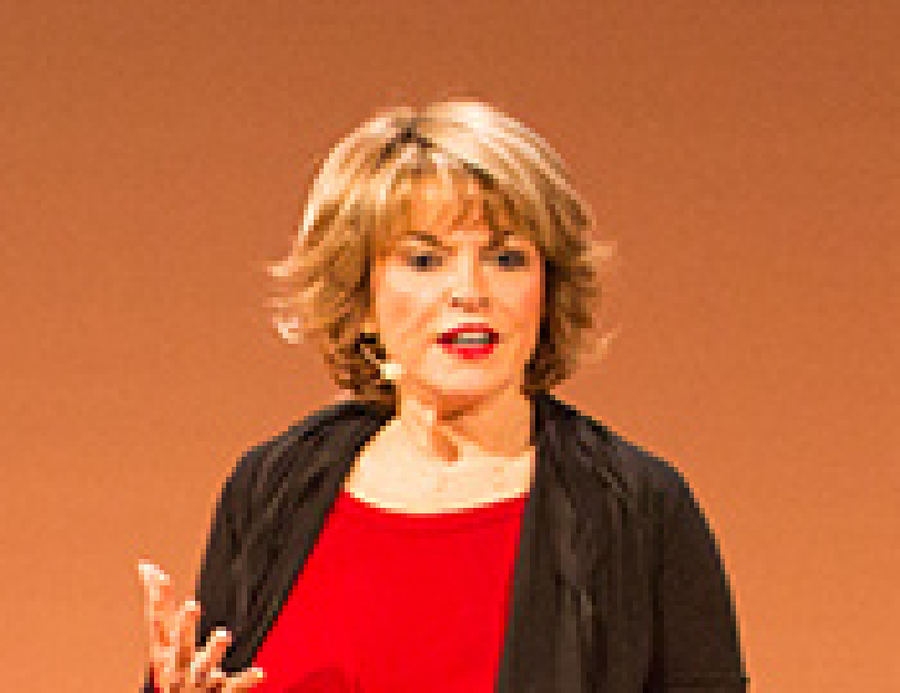

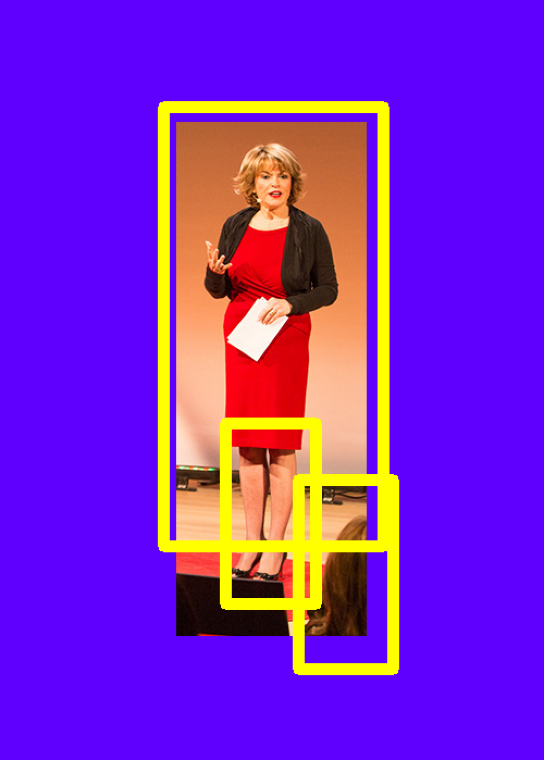

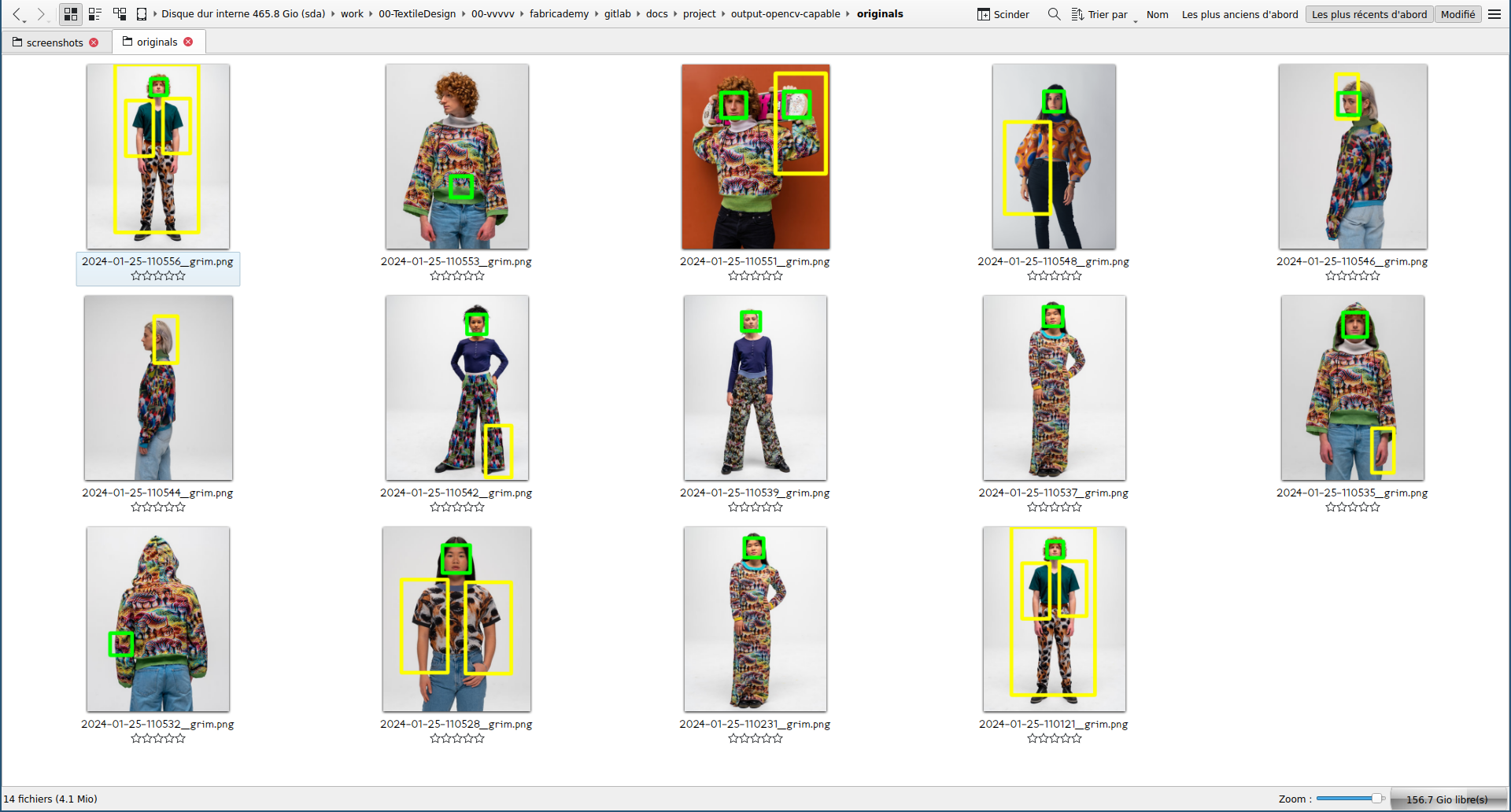

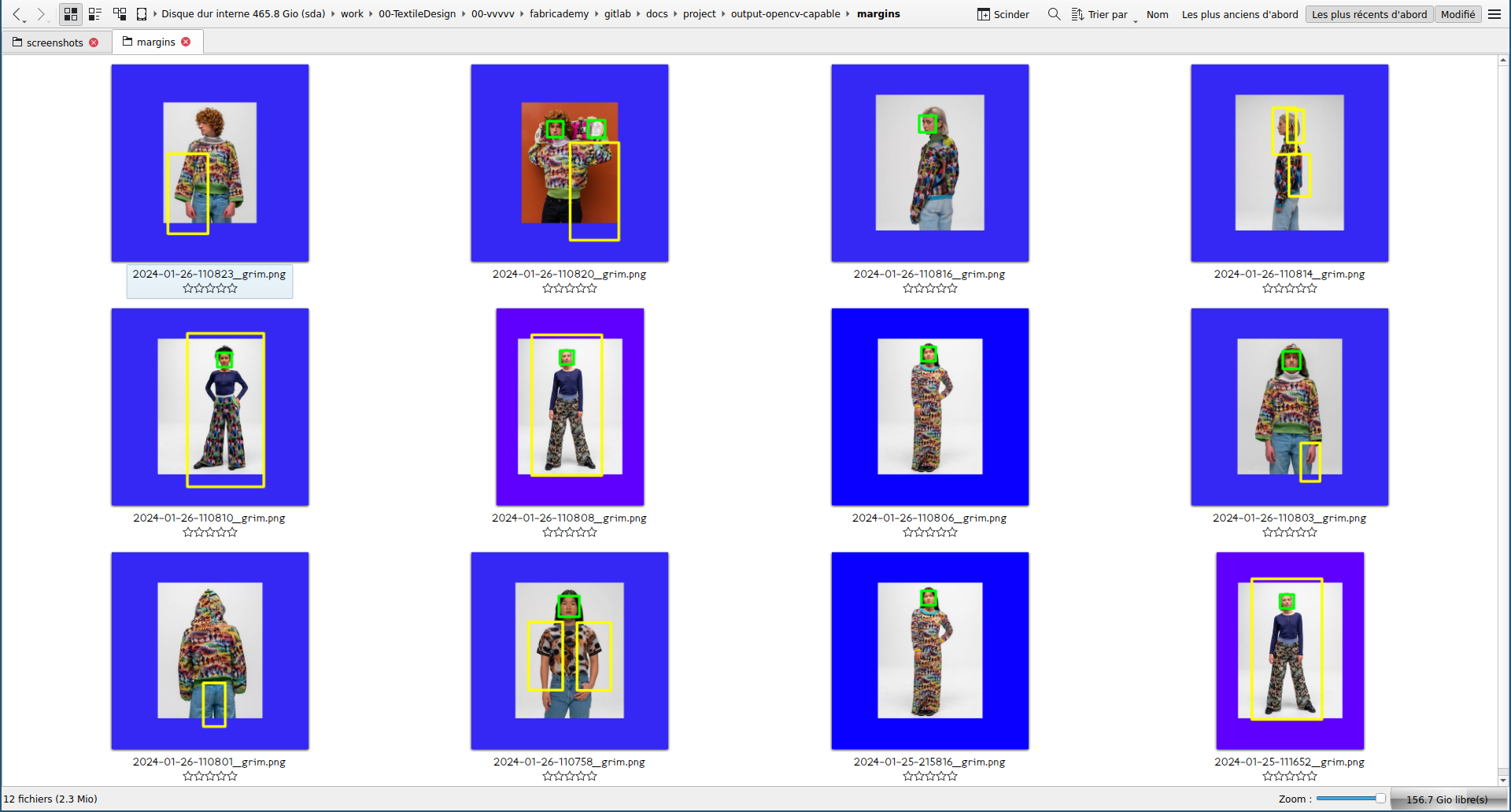

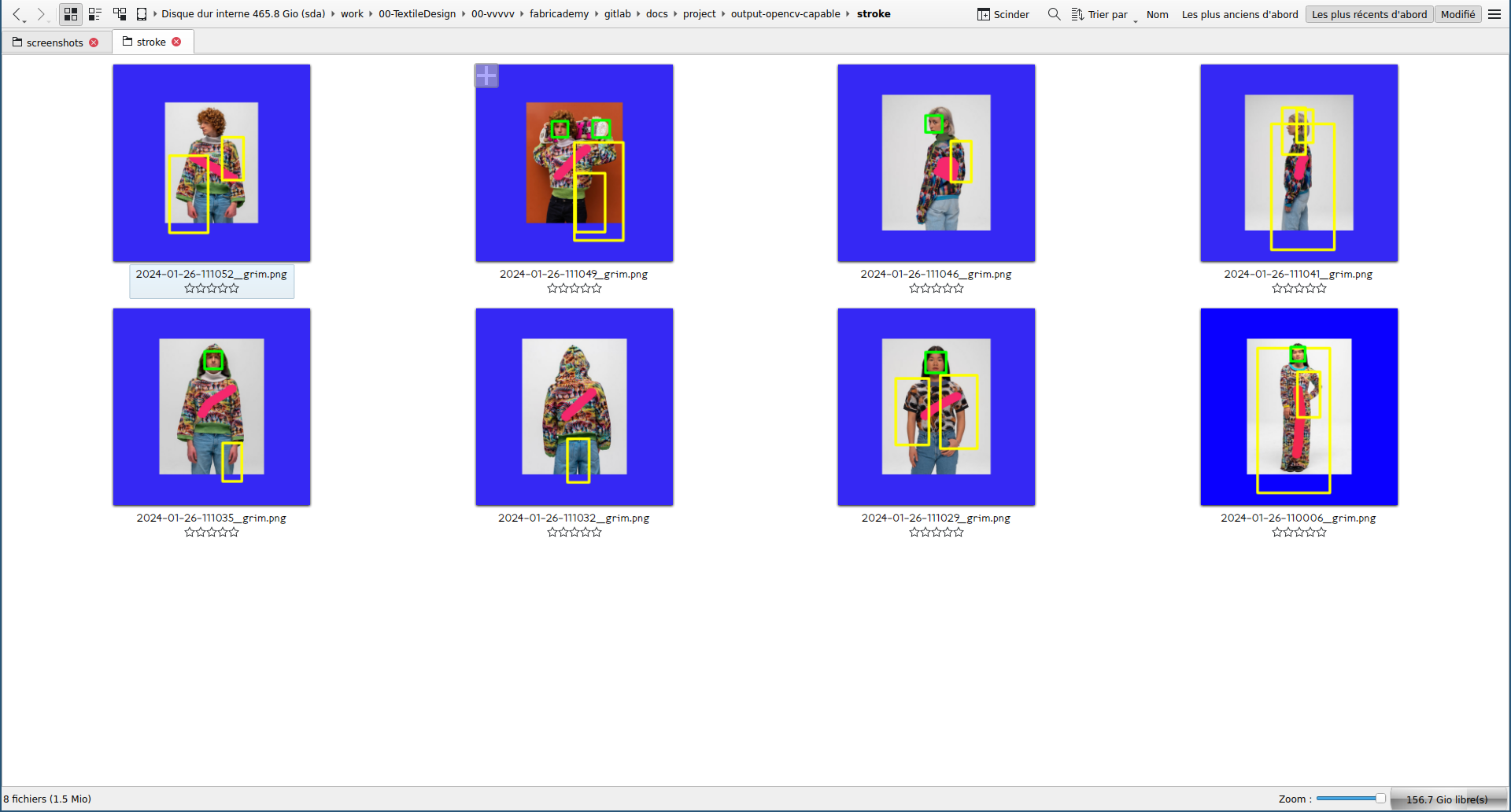

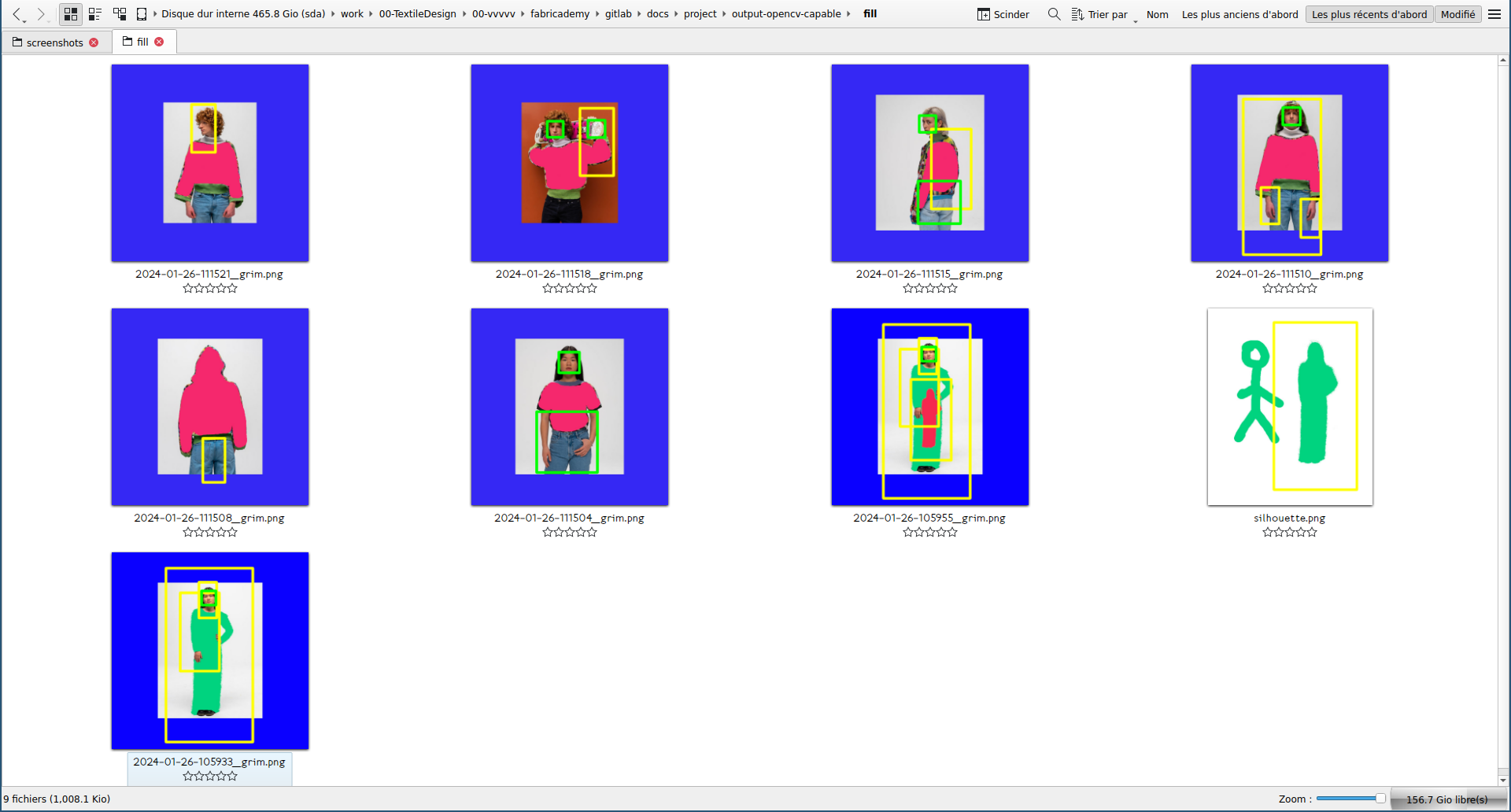

I combined the two previous scripts to detect human bodies, this time on still images. Detected faces are outlined in green and detected bodies in yellow. I first started with random open source pictures from Google Images, then I thought it would be nicer to use pictures of women in tech rather than random content.

Given the results, I wanted to check how much of the body the software needs to see in order to recognize a human, so I cropped a positive result to test that.
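A minimal sketch of one way to generate such crops systematically is given below. It is not necessarily how the crops shown here were produced: the crop_box helper, the example box and the list of fractions are all hypothetical. It keeps only the top part of a detected box, so each crop can be fed back to the detector.

```python
def crop_box(box, keep_fraction):
    """Return the top `keep_fraction` of an (x1, y1, x2, y2) box, in whole pixels."""
    if not 0 < keep_fraction <= 1:
        raise ValueError("keep_fraction must be in (0, 1]")
    x1, y1, x2, y2 = box
    height = y2 - y1
    return (x1, y1, x2, y1 + round(height * keep_fraction))


# Made-up positive detection, 64 pixels wide and 128 pixels high.
body = (100, 50, 164, 178)
for fraction in (1.0, 0.75, 0.5, 0.25):
    print(fraction, crop_box(body, fraction))
```

With an image loaded by OpenCV, a box (x1, y1, x2, y2) corresponds to the array slice img[y1:y2, x1:x2].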

Tests on Capable.design pictures¶

Code

#!/usr/bin/env python

import os
import pathlib
import sys

import cv2
import numpy as np

# initialize the HOG descriptor/person detector
hog = cv2.HOGDescriptor()
hog.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())

# loads face classifier tool
face_classifier = cv2.CascadeClassifier(
    cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
)

# get input and output paths from the command line
# ./human-detection.py "../input/" "../output/"
inputPath_str = sys.argv[1]
inputPath = pathlib.Path(inputPath_str)

# the output path is optional: without it, the result is shown in a window
outputPath = sys.argv[2] if len(sys.argv) > 2 else ''

# Sets variables according to whether the input is a file or a folder
if inputPath.is_dir():
    folder = True
    images = os.listdir(inputPath)
else:
    folder = False

# Main function
def analyseImage(image, filename):
    img = cv2.imread(image)

    # converts image to grayscale image for faster processing
    gray_image = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # detect people in the image
    boxes, weights = hog.detectMultiScale(gray_image, winStride=(8,8) )

    # converts the (x, y, w, h) bounding boxes to corner coordinates
    boxes = np.array([[x, y, x + w, y + h] for (x, y, w, h) in boxes])

    for (xA, yA, xB, yB) in boxes:
        # display the detected bodies in yellow in the colour picture
        cv2.rectangle(img, (xA, yA), (xB, yB), (0, 255, 255), 2)

    # detects faces in the image
    face = face_classifier.detectMultiScale(
        gray_image, scaleFactor=1.1, minNeighbors=5, minSize=(40, 40)
    )

    for (x, y, w, h) in face:
        # display the detected faces in green in the colour picture
        cv2.rectangle(img, (x, y), (x + w, y + h), (0, 255, 0), 2)

    # Saves output images in outputPath folder
    if folder and outputPath:
        print(filename)
        cv2.imwrite(filename, img)
    # Shows output in a new window
    else:
        import matplotlib.pyplot as plt
        img_rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
        plt.figure(figsize=(20,10))
        plt.imshow(img_rgb)
        plt.axis('off')
        plt.show()

# If the input path is a folder
# applies script to all images
if folder:
    for img in images:
        analyseImage(os.path.join(inputPath_str, img), os.path.join(outputPath, img))
# Otherwise just treats one image and shows it
else:
    analyseImage(inputPath_str, outputPath)
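One detail in the script above is easy to misread: OpenCV takes colours in blue, green, red order, so (0, 255, 255) draws yellow and not cyan. A small plain-Python sketch of the channel swap follows; bgr_to_rgb is a hypothetical helper, not an OpenCV function.

```python
def bgr_to_rgb(colour):
    """Reorder a (blue, green, red) tuple into (red, green, blue)."""
    blue, green, red = colour
    return (red, green, blue)


BODY_COLOUR_BGR = (0, 255, 255)  # used for bodies in the script above
FACE_COLOUR_BGR = (0, 255, 0)    # used for faces in the script above

print(bgr_to_rgb(BODY_COLOUR_BGR))  # (255, 255, 0): red + green = yellow
print(bgr_to_rgb(FACE_COLOUR_BGR))  # (0, 255, 0): green is unchanged
```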

To launch the script for several files at the same time, I used a simple bash loop:

for FILE in ../input-bodies/*; do python human-detection.py "$FILE"; done
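As an alternative to the shell loop, the list of files can be built in Python with pathlib, which also avoids depending on whether the folder argument ends with a slash. This is a sketch under assumptions: plan_outputs and the set of accepted extensions are hypothetical, and the temporary folder only serves as a demonstration.

```python
import tempfile
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumed set of image extensions


def plan_outputs(input_dir, output_dir):
    """Pair every image in input_dir with its destination path in output_dir."""
    input_dir, output_dir = Path(input_dir), Path(output_dir)
    return [
        (path, output_dir / path.name)
        for path in sorted(input_dir.iterdir())
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES
    ]


# Demonstration on a temporary folder holding one image and one text file.
with tempfile.TemporaryDirectory() as tmp:
    source_dir = Path(tmp) / "input-bodies"
    source_dir.mkdir()
    (source_dir / "a.jpg").touch()
    (source_dir / "notes.txt").touch()
    for source, target in plan_outputs(source_dir, Path(tmp) / "output"):
        print(source.name, "->", target)
```

Each (source, target) pair could then be passed to a function such as analyseImage, converting the paths with str() where OpenCV expects strings.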

References¶

- [ ] https://www.mdpi.com/1424-8220/20/2/342

- [x] https://www.vice.com/en/article/aen5pz/countersurveillance-textiles-trick-computer-vision-software

- [ ] https://github.com/sourabhvora/HyperFace-with-SqueezeNet

- [ ] https://pypi.org/project/knit-script

- [ ] OpenCV Cascade classifiers: https://docs.opencv.org/3.4/db/d28/tutorial_cascade_classifier.html

- PimEyes is a face search engine (reverse image search). You can upload one or several photos of a face, and it will search the web for pictures of the same person.

Kniterate¶

In parallel with the computer vision tests, I made some samples on the Kniterate, a semi-industrial knitting machine, as I plan to knit my digital camouflage with it. See this page to look at those experiments.